At Criteo, machine learning lies at the core of our business. We use machine learning for choosing when we want to display ads as well as for personalized product recommendations and for optimizing the look & feel of our banners (as we automatically generate our own banners for each partner using our catalog of products). Our motto at Criteo is “Performance is everything” and to deliver the best performance we can, we’ve built a large scale distributed machine learning framework, called Irma, that we use in production and for running experiments when we search for improvements on our models.

Fig 1: Logo of our Prediction team; photo credits Baba from Dragon Ball

The problems we solve

In the past, performance advertising was all about predicting clicks. That was a while ago. Since then, we’ve moved from predicting rare, binary events (clicks) to predicting very rare events (sales) and continuous events (sales amounts), all of them being quite noisy. Today, we not only predict the expected gains of showing an ad for real-time bidding, but also optimize the look & feel of our banners from a pretty much infinite set of possibilities; e.g., we optimize the layout and the color of our banners. We’ve also built a real-time recommender system to decide which products to display once we’ve won a bid. Our solution to all these problems lies in Irma.

Just to give you an idea of the scale we are dealing with: our ads are seen by 1B+ people every month, and we deal with 30B bid requests per day worldwide (500K predictions per sec, leading to 15M predictions per sec, in peak traffic). We typically have 10 ms or less to answer a request, that is, to predict whether or not we want to display an ad for a given user, to choose the products we want to show (from millions of possible products), and to decide on the optimal look & feel of the banner.

Fig 2: Typical Criteo banner ad

It’s all about the data

First of all, “it’s all about data”. We collect more than 25TB of raw data every day worldwide; this is our training data! That’s a *lot* of data. It’s actually so large that we decided to make it available to the research community. After releasing the Kaggle contest dataset in September of 2014, we recently released a new dataset with over 4 billion lines and over 1TB in size. This is the largest public machine learning dataset ever released. To crunch this data and train our predictive models, we’re using many thousands of cores and dozens of TB of RAM hosted by several Hadoop YARN clusters located throughout the world. They run 24/7, 365 days a year.

Once pre-processed, a typical training set will contain 500M examples and millions of unique features. We usually learn a new model in less than an hour using a few hundred cores – after lots of hard work to make our code as fast as possible.

Actually, no… (distributed) algorithms matter too

We’re using these resources to continuously train machine learning models at scale (offline part) and to do real-time predictions using trained models (online part). From a machine learning perspective, we rely, in most cases, on an L-BFGS solver initialized with SGD. L-BFGS has the nice property that it can be parallelized very easily by distributing the gradient computation across the nodes. To do the distribution, we tried different approaches such as Hadoop AllReduce or Apache Spark, which allow us to iterate multiple times over the data (e.g., to compute the gradient) without writing data to HDFS, as would be required if we chained multiple Hadoop reduce operations. Note, though, that Irma is not only about standard L1- or L2-regularized regression using distributed SGD and L-BFGS. We have also implemented, and use, more advanced methods such as transfer learning, embeddings, or learning to rank.
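To make the parallelization property concrete, here is a minimal sketch (not Irma's actual code) of why the gradient is easy to distribute: for a loss that is a sum over examples, each node can compute the gradient on its own shard, and an AllReduce-style sum recovers the full gradient that a solver such as L-BFGS needs at each iteration. The logistic loss, the synthetic data, the four "nodes", and all function names below are assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def shard_gradient(w, shard):
    """Sum of logistic-loss gradients over one shard of (features, label) pairs."""
    grad = [0.0] * len(w)
    for x, y in shard:
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
        for j, xj in enumerate(x):
            grad[j] += (p - y) * xj
    return grad

def all_reduce_sum(partials):
    """Stand-in for an AllReduce: element-wise sum of the per-node vectors."""
    return [sum(values) for values in zip(*partials)]

def make_data(n, dim, seed=0):
    # Synthetic examples with a low positive rate, purely for illustration.
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        y = 1 if rng.random() < 0.3 else 0
        data.append((x, y))
    return data

data = make_data(1000, 5)
w = [0.1] * 5

# Split the examples across 4 hypothetical worker nodes.
shards = [data[i::4] for i in range(4)]

# Each node computes its partial gradient; the partials are then summed.
distributed = all_reduce_sum([shard_gradient(w, s) for s in shards])

# Reference: the same gradient computed on a single node.
single_node = shard_gradient(w, data)

max_diff = max(abs(a - b) for a, b in zip(distributed, single_node))
print("largest difference between the two gradients:", max_diff)
```

The two gradients agree up to floating-point rounding, so the solver itself can stay on one node and only consume the reduced gradient, while the expensive pass over the data is spread across the cluster.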

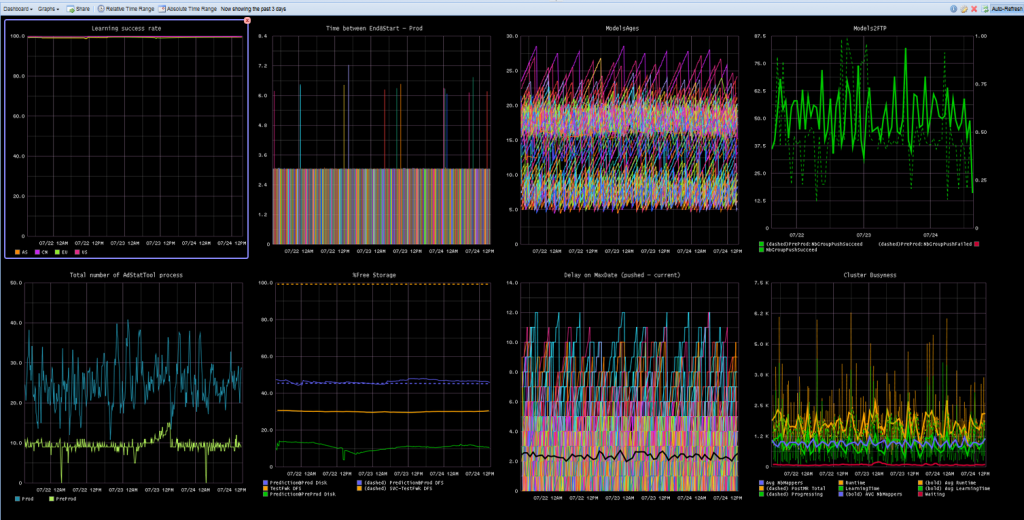

Moreover, fault tolerance is deeply embedded in our infrastructure at Criteo, especially for a project such as Irma, which lies at the heart of our Engine. Irma is built upon open source software such as Hadoop YARN and ZooKeeper to help us be as fault-tolerant as possible. This is a pretty difficult problem on which we are still working, although we have been pretty successful at keeping the number of failed learning jobs at very small and acceptable levels despite the large increase in both the amount of data we use and the number of learning jobs we run every day. We run unit tests and large non-regression tests on a daily basis, at scale. We monitor our jobs on the cluster using many, many (many) graphs (we’ve recently switched from Graphite to Grafana) shown on large displays throughout our offices. Performance is everything, and we look at it closely!

Fig 3: At Criteo, we like screens full of monitoring graphs

The right metrics

As many in the community have learned, using the right metrics is key. Obviously, we are fond of the classical metrics found in the literature (RMSE, log loss…). In practice, they give us some insight into our experiments, but fall short of providing a complete analytical picture. Therefore, we enrich them with more elaborate in-house metrics that belong to our secret sauce. Combined with offline experiments, we use them extensively to explore the massive space of possible algorithms and solutions, and to decide which solutions deserve online A/B testing. Offline and online experiments are the two pillars of our testing strategy: offline testing is fast, cheap, and efficient for wide exploration; online testing is expensive but has the final word on whether the new solution is better than what we currently have in production.
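For readers less familiar with the two classical metrics named above, here is a small sketch of how RMSE and log loss are usually computed offline. The labels and predicted probabilities are made-up values, and the function names are our own for this example; the in-house metrics are not shown.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between targets and predictions."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def log_loss(y_true, p_pred, eps=1e-15):
    """Average negative log-likelihood of binary labels under predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        # Clip probabilities so that log(0) never occurs.
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(y_true)

# Hypothetical click labels and predicted click probabilities.
labels = [0, 0, 1, 0]
predictions = [0.1, 0.2, 0.7, 0.4]

print("RMSE:", rmse(labels, predictions))
print("log loss:", log_loss(labels, predictions))
```

Both numbers are lower-is-better and cheap to compute on held-out data, which is what makes them convenient for wide offline exploration before an online test.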

The goal of these metrics isn’t only to improve the intrinsic performance of Criteo’s technology however. We want to increase the value both for Criteo and for our clients. In addition, we also want to make sure that the increments we bring aren’t just a “flash in the pan”, but will stand the test of time. For these reasons, we’ve integrated a new set of metrics into our tools and analysis, that incorporate the notion of value added to our partners both on the short-term and on the long-term. We use these metrics to decide whether a specific evolution should be rolled out or not.

To run all these experiments, we’ve designed and built a centralized testing framework. This framework runs at scale in one of our data centers and is designed to handle a massive number of experiments in parallel. It is used by just about anyone working on improving our models, whether they are business analysts testing a new feature or hard-core machine learning developers building a brand new learning method.

Looking forward

This is where we are today. But where are we heading? At Criteo’s R&D, we cover the full spectrum of solutions, from disruptive, fundamental research to smaller, incremental steps. We have a dedicated Research team that tends to focus on the former, but in fact, researchers and engineers work in a tightly coupled way.

Problems we are looking at include:

- new evaluation methods: we are currently looking into an offline A/B testing capacity based on recent work on causal inference in learning systems in the machine learning community; we are also investing heavily in our test framework and visualization tools.

- new algorithms: we are designing the next generation of learning algorithms, beyond the space of existing solutions. We look for solutions that will scale extraordinarily well and that fit within our very tight latency constraints, which makes this problem particularly interesting.

- new features: feature engineering is one of the most complex tasks in machine learning; we spend a great deal of effort exploring the gigantic space of possible solutions in an efficient way.

- new distribution schemes: as we wrote above, we tried different approaches for distributing our machine learning algorithms, such as Hadoop AllReduce or Apache Spark, and we are actively looking into new approaches.

… and this is only a small subset of the incredible challenges we tackle at Criteo!

If you felt excited while reading this post, we have good news for you: we are hiring top-notch machine learning engineers and scientists! We are looking for fun, brilliant people who enjoy solving complex problems in cool offices in downtown Paris (France) and Palo Alto (CA). If you think you’re one of those, take a look at our job postings.

And by all means, stay tuned: if we missed each other recently in France at ICML or COLT, do look for us at NIPS, RecSys, and many more conferences to come! We’ll be there and thrilled to talk to you.

Damien Lefortier

Olivier Koch